Anatomy of test authoring & run infra

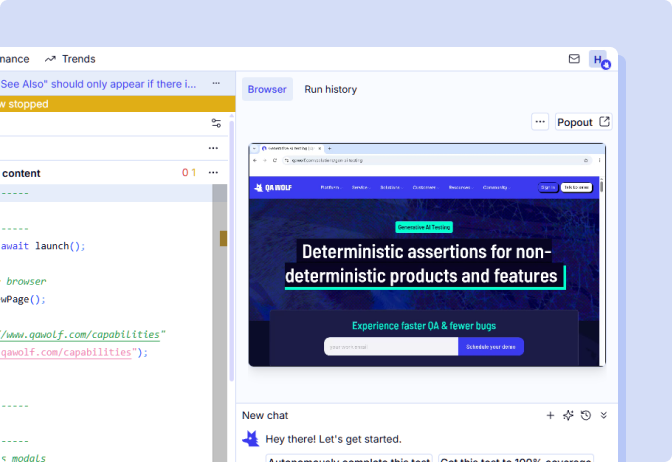

Flow automation editor

A browser-based workspace that brings together coding, version control, and real-time test execution so you can watch runs live, dig into logs, and keep your QA process transparent.

Containerized browser orchestration

High-resource, per-test containers run in parallel by default, keep environments isolated to prevent collisions, and eliminate flakes caused by shared resources.

Run infrastructure built for deep coverage at scale

High-Resource Test Containers

Run test suites in parallel without test collisions or cascading crashes

Instead of stacking tests on the same VM, QA Wolf spins up an ephemeral, high-resource container for each run. Reusing a versioned container build—and starting a fresh container on every retry—keeps environments clean, prevents collisions, and makes performance predictable.
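As a rough illustration of the "fresh container on every retry" idea, the sketch below models a retry loop that never reuses an environment. `Container`, `run_with_fresh_containers`, and the image tag `runner:1.4.2` are hypothetical names invented for this example; they are not QA Wolf APIs.

```python
import itertools
from dataclasses import dataclass, field
from typing import Callable

_ids = itertools.count(1)


@dataclass
class Container:
    """Hypothetical stand-in for an ephemeral test container."""
    image: str  # the versioned build every container starts from
    id: int = field(default_factory=lambda: next(_ids))
    state: dict = field(default_factory=dict)  # scratch state a test may dirty


def run_with_fresh_containers(
    test: Callable[[Container], bool], image: str, max_attempts: int = 3
) -> list[int]:
    """Run `test` until it passes, creating a new container per attempt.

    Returns the ids of the containers that were used.
    """
    used = []
    for _ in range(max_attempts):
        container = Container(image=image)  # same build, brand-new instance
        used.append(container.id)
        if test(container):
            break
    return used


attempts = []


def flaky(container: Container) -> bool:
    # The container must arrive clean, even on a retry.
    assert container.state == {}
    container.state["cookie"] = "left-over"  # dirt that must not leak forward
    attempts.append(container.id)
    return len(attempts) >= 2  # fails once, then passes


used = run_with_fresh_containers(flaky, image="runner:1.4.2")
```

Because each attempt builds its own `Container`, the state written by the failed first attempt is never visible to the retry.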

Pre-warmed Containers

Start tests instantly instead of waiting on build servers

Pre-provisioned browsers and environments are always ready. When you trigger a run, tests bind to a warm container in milliseconds. The pool auto-expands with load and is refreshed on a rolling schedule, keeping startups consistent, even at peak traffic.
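One way to picture a pre-warmed pool is a queue of already-booted containers that is topped up after each hand-off. The sketch below is a simplified, single-threaded model under that assumption; `WarmPool` and its methods are hypothetical, and a real pool would refill in the background and refresh members on a schedule.

```python
from collections import deque


class WarmPool:
    """Toy model of a pool of pre-booted containers (hypothetical API)."""

    def __init__(self, target_size: int):
        self.target_size = target_size
        self._next_id = 0
        self._ready = deque()
        self.refill()

    def _boot(self) -> str:
        # Stands in for the slow part: starting a browser and environment.
        self._next_id += 1
        return f"container-{self._next_id}"

    @property
    def ready_count(self) -> int:
        return len(self._ready)

    def refill(self) -> None:
        # Boot containers until the pool is back at its target size.
        while len(self._ready) < self.target_size:
            self._ready.append(self._boot())

    def acquire(self) -> str:
        # Fast path: hand over a container that is already running.
        if self._ready:
            return self._ready.popleft()
        # Pool exhausted: fall back to a cold start.
        return self._boot()


pool = WarmPool(target_size=2)
first = pool.acquire()          # served from the warm queue
after_acquire = pool.ready_count
pool.refill()                   # top the pool back up
after_refill = pool.ready_count
```

Raising `target_size` before calling `refill()` would model the pool expanding with load.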

Run Rules

Turn real user journeys into reliable test flows

You indicate what runs first, what runs next, and which steps can run together, and the platform builds the underlying DAG (directed acyclic graph) automatically. The runtime executes that plan while the Console shows live progress, retries, and timeouts. Sequencing and safe parallelism work out of the box, including for multi-user and cross-device flows, with no custom scripting or fragile CI wiring required.
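To make the DAG idea concrete, here is a minimal sketch that turns "runs after" rules into stages whose members can run in parallel. It uses Python's standard `graphlib.TopologicalSorter`; the flow names and the `plan_stages` helper are hypothetical, and this is not a description of how the platform builds its plan internally.

```python
from graphlib import TopologicalSorter


def plan_stages(depends_on: dict[str, set[str]]) -> list[list[str]]:
    """Group flows into stages; every flow in a stage can run in parallel.

    `depends_on` maps each flow to the flows that must finish before it.
    """
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()  # raises graphlib.CycleError if the rules contradict
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # flows whose prerequisites are done
        stages.append(ready)
        sorter.done(*ready)
    return stages


# Hypothetical multi-user journey: a buyer and a seller act in parallel
# after sign-up, and checkout waits for both.
rules = {
    "sign_up": set(),
    "buyer_adds_to_cart": {"sign_up"},
    "seller_updates_stock": {"sign_up"},
    "checkout": {"buyer_adds_to_cart", "seller_updates_stock"},
}
stages = plan_stages(rules)
# stages == [["sign_up"],
#            ["buyer_adds_to_cart", "seller_updates_stock"],
#            ["checkout"]]
```

Each inner list is a set of flows with no ordering constraint between them, which is what makes parallel execution safe.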

Selectable Browser Configurations

Test against any browser, version, and viewport

QA engineers can choose Chrome, Firefox, or Safari WebKit, and configure the viewport for responsive layouts or mobile-web apps.
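A browser-and-viewport selection can be thought of as a small configuration matrix. The sketch below expands one under assumed names: `BrowserConfig`, `build_matrix`, and the lowercase browser identifiers are hypothetical and do not reflect the platform's actual configuration format.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class BrowserConfig:
    browser: str
    width: int
    height: int


# Assumed identifiers for the three engines named above.
SUPPORTED = ("chrome", "firefox", "webkit")


def build_matrix(
    browsers: list[str], viewports: list[tuple[int, int]]
) -> list[BrowserConfig]:
    """Return one config per browser/viewport combination."""
    unknown = set(browsers) - set(SUPPORTED)
    if unknown:
        raise ValueError(f"unsupported browsers: {sorted(unknown)}")
    return [BrowserConfig(b, w, h) for b, (w, h) in product(browsers, viewports)]


# A desktop viewport and a phone-sized viewport on two engines.
matrix = build_matrix(["chrome", "webkit"], [(1280, 720), (390, 844)])
```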

The QA toolchain designed to harness AI

Interactive Live Browsers

Live execution you can watch and interact with

We use WebRTC to render the remote test browser directly in the Console with sub-second latency. You can intervene mid-run to retry a step, adjust an input, or probe a flaky selector—and the AI agent can do the same. The result is quicker triage, clearer shared context for non-technical teammates, and shorter paths from failure to fix.

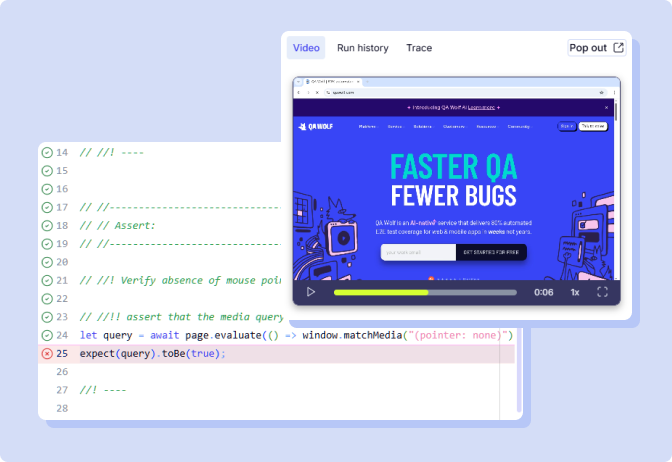

Line-by-Line Execution

Fix a step without starting over

The test code and browser share the same runtime, so you can run and re-run just the lines you’re working on without restarting the whole test. Because you’re coding in the same environment that executes in CI, you can adjust selectors, re-check assertions, or advance the flow one step at a time—without any drift between local and CI.
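The key property here is that state survives between executed lines. As a loose analogy only, the sketch below runs code snippets one at a time against a shared namespace, so a single line can be corrected and re-run without repeating the setup. `StepRunner` is a hypothetical name, and the plain `page` dictionary stands in for a live browser session.

```python
class StepRunner:
    """Run code snippets one at a time against state that persists."""

    def __init__(self):
        # Lives across steps, like a browser session that stays open.
        self.namespace = {}

    def run(self, source: str) -> None:
        exec(source, self.namespace)


runner = StepRunner()

# Expensive setup, executed once.
runner.run("page = {'url': '/login', 'fields': {}}")

# First attempt at a step.
runner.run("page['fields']['email'] = 'qa@example.com'")

# Re-run only that step with a fix; the setup above is not repeated.
runner.run("page['fields']['email'] = 'qa+retry@example.com'")

page = runner.namespace["page"]
```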

Detailed Telemetry and Reporting

See what failed, why it failed, and where to fix it

Every run includes a unified, time-aligned record of the test with video, logs, traces, network requests, and system state. From that record, you can automatically generate and assign a bug report that links to every artifact QA, devs, and PMs need.
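"Time-aligned" can be illustrated by merging separately recorded event streams into one timeline ordered by timestamp. The sketch below does this with the standard-library `heapq.merge`; the `Event` type and the sample events are invented for illustration and say nothing about the platform's actual data format.

```python
import heapq
from typing import NamedTuple


class Event(NamedTuple):
    t_ms: int     # milliseconds since the run started
    source: str   # which recorder produced the event
    detail: str


def align(*streams: list[Event]) -> list[Event]:
    """Merge streams that are each already sorted by time into one timeline."""
    return list(heapq.merge(*streams, key=lambda e: e.t_ms))


video = [Event(0, "video", "recording started")]
logs = [
    Event(120, "log", "click #submit"),
    Event(900, "log", "assertion failed: banner not visible"),
]
network = [Event(150, "network", "POST /api/orders -> 500")]

timeline = align(video, logs, network)
sources = [e.source for e in timeline]
```

Read top to bottom, the merged timeline places the failing request between the click and the failed assertion, which is the kind of context a unified record is meant to provide.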

Test infra like nothing you've seen before

| | DIY on CI runners (GitHub Actions, CircleCI, Jenkins) | Hosted browser clouds (BrowserStack, SauceLabs, Firebase, AWS device farm) | QA Wolf |
|---|---|---|---|
| Test isolation | Multiple tests per runner | Isolated session, shared capacity | One test per container |
| Parallelization | Limited to available runners | Concurrency limited by plan | 100% by default |
| Start-up time | Cold start, 1-4 min | Variable by provider, 2-10 sec | Pre-warmed, <500 ms |
| Interactive runners | None | None | Always |
| Live execution view | None | None | Always |
| Test run orchestration | Self-maintained YAML | Test-level ordering only | UI generates suite-level DAG |
| Artifacts & telemetry | None by default | Logs by default; video limited by plan | Video, traces, logs, shareable links |
